Reddit Deepfakes How to Know When You Can't Train Anymore

The snapshots above look like people you'd know. Your daughter's best friend from college, perhaps? That guy from human resources at work? The emergency-room physician who took care of your sprained ankle? One of the kids from down the street?

Nope. All of these images are "deepfakes" — the nickname for computer-generated, photorealistic media created via cutting-edge artificial intelligence technology. They are just one example of what this fast-evolving method can do. (You could create synthetic images yourself at ThisPersonDoesNotExist.com.) Hobbyists, for example, have used the same AI techniques to populate YouTube with a host of startlingly lifelike video spoofs — the kind that show real people such as Barack Obama or Vladimir Putin doing or saying goofy things they never did or said, or that revise famous movie scenes to give actors like Amy Adams or Sharon Stone the face of Nicolas Cage. All the hobbyists need is a PC with a high-end graphics chip, and maybe 48 hours of processing time.

It's good fun, not to mention jaw-droppingly impressive. And coming down the line are some equally remarkable applications that could make quick work of once-painstaking tasks: filling in gaps and scratches in damaged images or video; turning satellite photos into maps; creating realistic streetscape videos to train autonomous vehicles; giving a natural-sounding voice to those who have lost their own; turning Hollywood actors into their older or younger selves; and much more.

Deepfake artificial-intelligence methods can map the face of, say, actor Nicolas Cage onto anyone else — in this case, actor Amy Adams in the film Man of Steel.

CREDIT: MEMES COFFEE

Yet this technology has an obvious — and potentially enormous — dark side. Witness the many denunciations of deepfakes as a menace, Facebook's decision in January to ban (some) deepfakes outright and Twitter's announcement a month later that it would follow suit.

"Deepfakes play to our weaknesses," explains Jennifer Kavanagh, a political scientist at the RAND Corporation and coauthor of "Truth Decay," a 2018 RAND report about the diminishing role of facts and data in public discourse. When we see a doctored video that looks utterly real, she says, "it's really hard for our brains to disentangle whether that's true or false." And the internet being what it is, there are any number of online scammers, partisan zealots, state-sponsored hackers and other bad actors eager to take advantage of that fact.

"The threat here is not, 'Oh, we have fake content!'" says Hany Farid, a computer scientist at the University of California, Berkeley, and author of an overview of image forensics in the 2019 Annual Review of Vision Science. Media manipulation has been around forever. "The threat is the democratization of Hollywood-style technology that can create really compelling fake content." It's photorealism that requires no skill or effort, he says, coupled with a social-media ecosystem that can spread that content around the globe with a mouse click.

Digital image forensics expert Hany Farid of UC Berkeley discusses how artificial intelligence can create fake media, how it proliferates and what people can do to guard against it.

CREDIT: HUNNIMEDIA FOR KNOWABLE MAGAZINE

The technology gets its nickname from Deepfakes, an anonymous Reddit user who launched the movement in November 2017 by posting AI-generated videos in which the faces of celebrities such as Scarlett Johansson and Gal Gadot are mapped onto the bodies of porn stars in action. This kind of non-consensual celebrity pornography still accounts for about 95 percent of all the deepfakes out there, with most of the rest being jokes of the Nicolas Cage variety.

But while the current targets are at least somewhat protected by fame — "People assume it's not actually me in a porno, however demeaning it is," Johansson said in a 2018 interview — abuse-survivor advocate Adam Dodge figures that non-celebrities will increasingly be targeted, as well. Old-fashioned revenge porn is a ubiquitous feature of domestic violence cases as it is, says Dodge, who works with victims of such abuse as the legal director for Laura's House, a nonprofit agency in Orange County, California. And now with deepfakes, he says, "unsophisticated perpetrators no longer require nudes or a sex tape to threaten a victim. They can simply manufacture them."

And then there's the potential for political abuse. Want to discredit an enemy? Indian journalist Rana Ayyub knows how that works: In April 2018, her face was in a deepfake porn video that went viral across the subcontinent, apparently because she is an outspoken Muslim woman whose investigations offended India's ruling party. Or how about subverting democracy? We got a taste of that in the fake-news and disinformation campaigns of 2016, says Farid. And there could be more to come. Imagine it's election eve in 2020 or 2024, and someone posts a convincing deepfake video of a presidential candidate doing or saying something vile. In the hours or days it would take to expose the fakery, Farid says, millions of voters might go to the polls thinking the video is real — thereby undermining the outcome and legitimacy of the election.

Meanwhile, don't forget old-fashioned greed. With today's deepfake technology, Farid says, "I could create a fake video of Jeff Bezos saying, 'I'm quitting Amazon,' or 'Amazon's profits are down 10 percent.'" And if that video went viral for even a few minutes, he says, markets could be thrown into turmoil. "You could have global stock manipulation to the tune of billions of dollars."

And beyond all that, Farid says, looms the "terrifying landscape" of the post-truth world, when deepfakes have become ubiquitous, seeing is no longer believing and miscreants can bask in a whole new kind of plausible deniability. Body-cam footage? CCTV tapes? Photographic evidence of human-rights atrocities? Audio of a presidential candidate boasting he can grab women anywhere he wants? "DEEPFAKE!"

Deepfake video methods can digitally alter a person's lip movements to match words that they never said. As part of an effort to raise awareness about such technologies through art, the MIT Center for Advanced Virtuality created a fake video showing President Richard Nixon giving a speech about astronauts being stranded on the moon.

CREDIT: HALSEY BURGUND / MIT CENTER FOR ADVANCED VIRTUALITY

Thus the widespread concern about deepfake technology, which has triggered an urgent search for answers among journalists, police investigators, insurance companies, human-rights activists, intelligence analysts and just about anyone else who relies on audiovisual evidence.

Among the leaders in that search has been Sam Gregory, a documentary filmmaker who has spent two decades working for WITNESS, a human-rights organization based in Brooklyn, New York. One of WITNESS' major goals, says Gregory, is to help people in troubled parts of the world take advantage of dramatically improved cell-phone cameras "to document their realities in ways that are trustworthy and compelling and safe to share."

Unfortunately, he adds, "you can't do that in this day and age without thinking about the downsides" of those technologies — deepfakes being a prime example. So in June 2018, WITNESS partnered with First Draft, a global nonprofit that supports journalists grappling with media manipulation, to host one of the first serious workshops on the subject. Technologists, human-rights activists, journalists and people from social-media platforms developed a roadmap to prepare for a world of deepfakes.

More WITNESS-sponsored meetings have followed, refining that roadmap down to a few key issues. One is a technical challenge for researchers: Find a quick and easy way to tell trustworthy media from fake. Another is a legal and economic challenge for large social media platforms such as Facebook and YouTube: Where does your responsibility lie? After all, says Farid, "if we did not have a delivery mechanism for deepfakes in the form of social media, this would not be a threat that we are concerned about."

And for everyone there is the challenge of education — helping people understand what deepfake technology is, and what it can do.

First, deep learning

Deepfakes have their roots in the triumph of "neural networks," a once-underdog form of artificial intelligence that has re-emerged to power today's revolution in driverless cars, speech and image recognition, and a host of other applications.

Although the neural-network idea can be traced back to the 1940s, it began to take hold only in the 1960s, when AI was barely a decade old and progress was frustratingly slow. Trivial-seeming aspects of intelligence, such as recognizing a face or understanding a spoken word, were proving to be far tougher to program than supposedly hard skills like playing chess or solving cryptograms. In response, a cadre of rebel researchers declared that AI should give up trying to generate intelligent behavior with high-level algorithms — then the mainstream approach — and instead do so from the bottom up by simulating the brain.

To recognize what's in an image, for example, a neural network would pipe the raw pixels into a network of simulated nodes, which were highly simplified analogs of the brain cells known as neurons. These signals would then flow from node to node along connections: analogs of the synaptic junctions that pass nerve impulses from one neuron to the next. Depending on how the connections were organized, the signals would combine and split as they went, until eventually they would activate one of a series of output nodes. Each output, in turn, would correspond to a high-level classification of the image's content — "puppy," for example, or "eagle," or "George."
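For readers who think in code, that flow of signals can be sketched in a few lines of Python. This is a toy under loud assumptions: the four-pixel "image," the hand-picked weights and the names `layer` and `classify` are all invented for illustration and come from no real system.

```python
import math

def sigmoid(x):
    """Squash any number into the range 0..1, a stand-in for a node 'firing'."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """One step of the flow: each node sums its weighted inputs, then squashes the total."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

def classify(pixels, hidden_w, hidden_b, out_w, out_b, labels):
    """Pipe pixels through a hidden layer to the output nodes; the strongest output wins."""
    hidden = layer(pixels, hidden_w, hidden_b)
    outputs = layer(hidden, out_w, out_b)
    best = max(range(len(outputs)), key=outputs.__getitem__)
    return labels[best], outputs

# Hypothetical, hand-picked connection strengths for a four-pixel "image".
LABELS = ["puppy", "eagle"]
HIDDEN_W = [[2.0, 2.0, -2.0, -2.0], [-2.0, -2.0, 2.0, 2.0]]
HIDDEN_B = [0.0, 0.0]
OUT_W = [[3.0, -3.0], [-3.0, 3.0]]
OUT_B = [0.0, 0.0]

label, scores = classify([1.0, 1.0, 0.0, 0.0], HIDDEN_W, HIDDEN_B, OUT_W, OUT_B, LABELS)
print(label, scores)
```

Here the weights are fixed by hand; in a real network they would be learned from examples, and there would be millions of them.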

The payoff, advocates argued, was that neural networks could be much better than standard, algorithmic AI at dealing with real-world inputs, which tend to be full of noise, distortion and ambiguity. (Say "service station" out loud. They're two words — but it's hard to hear the boundary.)

And better still, the networks wouldn't need to be programmed, just trained. Simply show your network a few zillion examples of, say, puppies and non-puppies, like so many flash cards, and ask it to guess what each image shows. Then feed any wrong answers back through all those connections, tweaking each one to amplify or dampen the signals in a way that produces a better result next time.
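The flash-card routine can be sketched with a single simulated node. Everything here is an assumption made for illustration: the two-number "images," the learning rate and the function names are invented, and a real network would push the error back through many layers (a procedure known as backpropagation) rather than adjusting one node.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict(weights, bias, inputs):
    """The node's guess: a number near 1 means 'puppy', near 0 means 'not a puppy'."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train(examples, epochs=500, learning_rate=0.5):
    """Show every flash card repeatedly, nudging each connection after each guess."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)  # how wrong was the guess?
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Hypothetical flash cards: (two made-up image features, 1 = puppy, 0 = not a puppy).
EXAMPLES = [
    ([0.9, 0.1], 1), ([0.8, 0.3], 1), ([0.7, 0.2], 1),
    ([0.2, 0.9], 0), ([0.1, 0.7], 0), ([0.3, 0.8], 0),
]

weights, bias = train(EXAMPLES)
print(predict(weights, bias, [0.85, 0.15]), predict(weights, bias, [0.15, 0.85]))
```

Nobody tells the node which feature matters. It works that out from the errors alone, which is the sense in which the network is trained rather than programmed.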

The earliest attempts to implement neural networks weren't terribly impressive, which explains the underdog status. But in the 1980s, researchers greatly improved the performance of networks by organizing the nodes into a series of layers, which were roughly analogous to different processing centers in the brain's cortex. So in the image example, pixel data would flow into an input layer; then be combined and fed into a second layer that contained nodes responding to simple features like lines and curves; then into a third layer that had nodes responding to more complex shapes such as noses; and so on.

Later, in the mid-2000s, exponential advances in computer power allowed advocates to develop networks that were far "deeper" than before — meaning they could be built not just with one or two layers, but dozens. The performance gains were spectacular. In 2009, neural network pioneer Geoffrey Hinton and two of his graduate students at the University of Toronto demonstrated that such a "deep-learning" network could recognize speech much better than any other known method. Then in 2012, Hinton and two other students showed that a deep-learning network could recognize images better than any standard vision system — and neural networks were underdogs no more. Tech giants such as Google, Microsoft and Amazon quickly started incorporating deep-learning techniques into every product they could, as did researchers in biomedicine, high-energy physics and many other fields.

The neural-network approach to artificial intelligence is designed to model the brain's neurons and links with a web of simulated nodes and connections. Such a network processes signals by combining and recombining them as they flow from node to node. Early networks were small and limited. But today's versions are far more powerful, thanks to modern computers that can run networks both bigger and "deeper" than before, with their nodes organized into many more layers.

Yet as spectacular as deep learning's successes were, they almost always boiled down to some form of recognition or classification — for example, Does this image from the drone footage show a rocket launcher? It wasn't until 2014 that a PhD student at the University of Montreal, Ian Goodfellow, showed how deep learning could be used to generate images in a practical way.

Goodfellow's idea, dubbed the generative adversarial network (GAN), was to gradually improve an image's quality through competition — an ever-escalating race in which two neural networks try to outwit each other. The process begins when a "generator" network tries to create a synthetic image that looks like it belongs to a particular set of images — say, a big collection of faces. That initial attempt might be crude. The generator then passes its effort to a "discriminator" network that tries to see through the deception: Is the generator's output fake, yes or no? The generator takes that feedback, tries to learn from its mistakes and adjusts its connections to do better on the next cycle. But so does the discriminator — on and on they go, cycle after cycle, until the generator's output has improved to the point where the discriminator is baffled.
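The back-and-forth between the two networks can be sketched at toy scale. In this deliberately tiny illustration, the "images" are single numbers, the "real" collection is a cloud of numbers near 4, and each network is reduced to two adjustable values. All names and settings are invented for the sketch. It shows the alternating cycle only; a toy this small tends to chase its target rather than settle on it, and it is nowhere near a practical image generator.

```python
import math
import random

def sigmoid(x):
    """Numerically safe squashing function (output between 0 and 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def generate(gen, noise):
    """Generator: turn random noise into a synthetic sample (here, one number)."""
    return gen["scale"] * noise + gen["shift"]

def judge(disc, sample):
    """Discriminator: estimated probability that a sample is real."""
    return sigmoid(disc["w"] * sample + disc["c"])

def discriminator_step(disc, real, fake, lr):
    """Nudge the discriminator toward rating real samples high and fakes low."""
    grad_w = grad_c = 0.0
    for x in real:
        p = judge(disc, x)
        grad_w += (1.0 - p) * x  # gradient of log D(x)
        grad_c += 1.0 - p
    for x in fake:
        p = judge(disc, x)
        grad_w -= p * x          # gradient of log(1 - D(x))
        grad_c -= p
    n = len(real) + len(fake)
    disc["w"] += lr * grad_w / n
    disc["c"] += lr * grad_c / n

def generator_step(gen, disc, noises, lr):
    """Nudge the generator toward samples the discriminator rates as real."""
    grad_scale = grad_shift = 0.0
    for z in noises:
        p = judge(disc, generate(gen, z))
        push = (1.0 - p) * disc["w"]  # gradient of log D(G(z)) w.r.t. the sample
        grad_scale += push * z
        grad_shift += push
    gen["scale"] += lr * grad_scale / len(noises)
    gen["shift"] += lr * grad_shift / len(noises)

def train(steps=200, batch=32, lr=0.05, seed=0):
    """Alternate the two steps, cycle after cycle."""
    rng = random.Random(seed)
    gen = {"scale": 1.0, "shift": 0.0}
    disc = {"w": 0.0, "c": 0.0}
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]  # hypothetical "real" data
        noises = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [generate(gen, z) for z in noises]
        discriminator_step(disc, real, fake, lr)
        generator_step(gen, disc, noises, lr)
    return gen, disc

gen, disc = train()
print(gen, disc)
```

The detail worth noticing is that the generator never sees a real sample. Its only guidance is the discriminator's verdict, which is exactly the feedback loop described above.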

The images generated for that first GAN paper were low-resolution and not always convincing. But as Facebook's AI chief Yann LeCun later put it, GANs were "the coolest idea in deep learning in the last 20 years." Researchers were soon jumping in with a multitude of variations on the idea. And along the way, says Farid, the quality of the generated imagery increased at an astonishing rate. "I don't think I've ever seen a technology develop as fast," he says.

A generative adversarial network (GAN) learns to generate images by pitting two neural networks against each other. The generator network tries to create an image that looks real, while a discriminator network tries to see through the charade — and both try to learn from their mistakes. After enough cycles, the results can look startlingly realistic.

Make it till you fake it

What few researchers seemed to have anticipated, however, was that the malicious uses of their technology would develop just as rapidly. Deepfakes, the Redditor, kicked things off on November 2, 2017, with the launch of the subreddit /r/deepfakes as a showcase for GAN-generated porn. Specifically, he announced that he was using open-source AI software from Google and elsewhere to implement previously scattered academic research on face-swapping: putting one person's face onto another person's body.

Face swapping was nothing new. But in conventional applications such as Photoshop, it had always been a digital cut-and-paste operation that looked pretty obvious unless the replacement face had the same lighting and orientation as the original. The GAN approach made such swapping seamless. First, the generator network would be trained on thousands of images and videos of, say, the film star Gadot, producing a 3D model of her head and face. Next, the generator could use this model to map Gadot's face digitally onto the target, giving it the same alignment, expressions and lighting as an actor in, say, an adult film. And then it would repeat the process frame by frame to make a video.

Deepfakes' initial results weren't quite good enough to fool a careful observer — but still gained a following. He soon had a host of imitators, especially after another anonymous individual packaged Deepfakes' algorithm into self-contained "FakeApp" software, and launched it as a free download in January 2018. Within a month, sensing a lawsuit waiting to happen, Reddit had banned non-consensual deepfake porn videos outright — as did the world's largest porn sharing site, Pornhub. ("How bad do you have to be to be banned by Reddit and Pornhub at the same time?" says Farid.)

Yet other sites were already coming online, and the flood continued — as did anxieties about the software's potential use in the fake-news era. Heightening those anxieties were two variations that predated Deepfakes' face-swap software, and that many experts consider more threatening. "Puppet master," a facial-reenactment approach pioneered by researchers at Stanford University and others in 2016 with the goal of improving facial motion-capture techniques for video games and movies, allowed an actor to impose her facial expressions on someone in, say, a YouTube video — in real time. "Lip sync," pioneered at the University of Washington in 2017 with similar goals, could start with an existing video and change the targeted person's mouth movements to correspond to a fake audio track.

A video describes one of the technologies that could be used to make convincing deepfakes. This approach offers a way to capture an actor's facial expressions and embed them on an existing video of another person.

CREDIT: ASSOCIATION FOR COMPUTING MACHINERY (ACM)

Legitimate goals or not, these two techniques together could make anyone say or do anything. In April 2018, film director Jordan Peele dramatized the issue with a lip-synced fake video of former President Obama giving a public-service announcement about the dangers of deepfakes on Buzzfeed. And later that same year, when WITNESS' Gregory and his colleagues started working on the roadmap for dealing with the problem, the issue took the form of a technical challenge for researchers: Find a simple way to tell trustworthy media from fake.

Detecting forgeries

The good news is that this already was a research priority well before deepfakes came along, in response to the surge in low-tech "cheapfakes" made with non-AI-based tools such as Photoshop. Many of the counter-techniques have turned out to apply to all forms of manipulation.

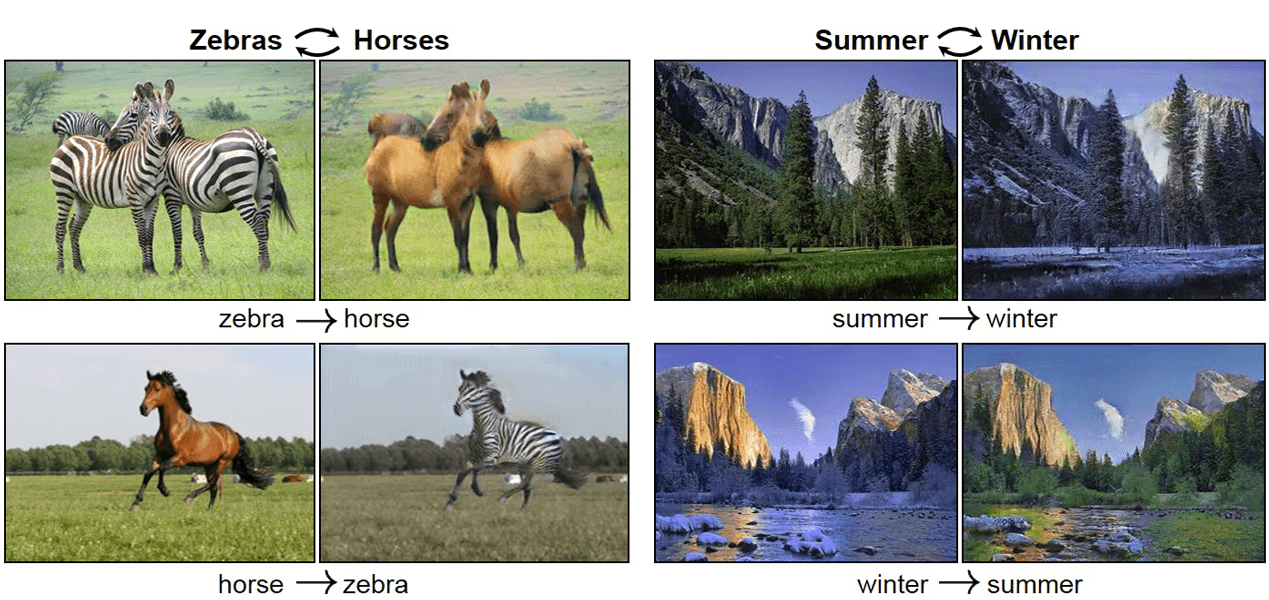

One approach uses generative adversarial network techniques to turn real images into altered ones. The program called CycleGAN transforms horses into zebras, winter into summer and vice versa.

CREDIT: J. ZHU ET AL / ICCV 2017

Broadly speaking, the efforts to date follow two strategies: authentication and detection.

With authentication — the focus of startups such as Truepic in La Jolla, Calif., and Amber Video in San Francisco — the idea is to give each media file a software badge guaranteeing it has not been manipulated.

In Truepic's case, says company vice president Mounir Ibrahim, the software looks like any other smartphone photo app. But in its inner workings, he says, the app inscribes each image or video it records with the digital equivalent of a DNA fingerprint. Anyone could then verify that file's authenticity by scanning it with a known algorithm. If the scan and fingerprint do not match, it means the file has been manipulated.
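The article does not say which algorithm Truepic uses, so the following is only a generic sketch of the scan-and-compare idea. It assumes a standard cryptographic hash (SHA-256, from Python's `hashlib`) as the fingerprint; the function names and the sample bytes are invented.

```python
import hashlib

def fingerprint(media_bytes):
    """Compute a fixed-length fingerprint of a file's exact contents."""
    return hashlib.sha256(media_bytes).hexdigest()

def is_unaltered(media_bytes, recorded_fingerprint):
    """Re-scan the file and compare against the fingerprint recorded at capture time."""
    return fingerprint(media_bytes) == recorded_fingerprint

# Hypothetical stand-in for the bytes of a photo as recorded by the camera app.
original = b"raw bytes of a photo as captured"
recorded = fingerprint(original)

tampered = original.replace(b"photo", b"phot0")  # a tiny, one-character edit

print(is_unaltered(original, recorded))  # the untouched file matches
print(is_unaltered(tampered, recorded))  # the edited file does not
```

Changing even one character of the file yields a completely different fingerprint, which is what makes a mismatch a reliable sign of alteration. The scheme is only as trustworthy as the recorded fingerprint itself, though.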

To safeguard against fakery of the digital fingerprints, the software creates a public record of each fingerprint using the blockchain technique originally developed for cryptocurrencies like Bitcoin. "And what that does is close the digital chain of custody," says Ibrahim. Records in the blockchain are effectively un-hackable: They are distributed across computers all over the globe, yet are so intertwined that you couldn't change one without making impossibly intricate changes to all the others simultaneously.
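The "intertwined" property can be illustrated with a bare-bones hash chain, in which every record carries the hash of the record before it. This is a sketch of the chaining idea only, with invented names and a made-up record layout. A real blockchain also replicates the ledger across many computers and uses a consensus protocol, neither of which is modeled here.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the very first record

def entry_hash(entry):
    """Hash a record's contents together with the hash of the record before it."""
    payload = json.dumps(
        {"fingerprint": entry["fingerprint"], "previous": entry["previous"]},
        sort_keys=True,
    ).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def append(ledger, media_fingerprint):
    """Add a record that is chained to the current end of the ledger."""
    previous = ledger[-1]["hash"] if ledger else GENESIS
    entry = {"fingerprint": media_fingerprint, "previous": previous}
    entry["hash"] = entry_hash(entry)
    ledger.append(entry)

def is_intact(ledger):
    """Walk the chain and check that every link still matches."""
    previous = GENESIS
    for entry in ledger:
        if entry["previous"] != previous or entry["hash"] != entry_hash(entry):
            return False
        previous = entry["hash"]
    return True

ledger = []
for fp in ["fingerprint-of-clip-1", "fingerprint-of-clip-2", "fingerprint-of-clip-3"]:
    append(ledger, fp)
print(is_intact(ledger))

# A forger rewrites one record and even recomputes that record's own hash...
ledger[1]["fingerprint"] = "fingerprint-of-a-forgery"
ledger[1]["hash"] = entry_hash(ledger[1])
print(is_intact(ledger))  # ...but the next record no longer links up
```

To hide the change, the forger would have to rewrite every later record as well, on every copy of the ledger.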

Amber Video's approach is broadly similar, says company CEO Shamir Allibhai. Like Ibrahim, he emphasizes that his company is not associated with Bitcoin itself, nor dependent on cryptocurrencies in any way. The important thing about a blockchain is that it's public, distributed and immutable, says Allibhai. So you could share, say, a 15-minute segment of a surveillance video with police, prosecutors, defense attorneys, judges, jurors, reporters and the general public — across years of appeals — and all of them could confirm the clip's veracity. Says Allibhai: "You only need to trust mathematics."

Skeptics say that a blockchain-based authentication scheme isn't likely to become universal for a long time, if ever; it would essentially require every digital camera on the planet to incorporate the same standard algorithm. And while it could become viable for organizations that can insist on authenticated media — think courts, police departments and insurance companies — it doesn't do anything about the myriad digital images and videos already out there.

Thus the second strategy: detecting manipulations after the fact. That's the approach of Amsterdam-based startup DeepTrace, says Henry Ajder, head of threat intelligence at the company. The trick, he says, is not to get hung up on specific image artifacts, which can change rapidly as technology evolves. He points to a classic case in 2018, when researchers at the State University of New York in Albany found that figures in deepfake videos don't blink realistically (mainly because the images used to train the GANs rarely show people with their eyes closed). Media accounts hailed this as a foolproof way of detecting deepfakes, says Ajder. But just as the researchers themselves had predicted, he says, "within a couple of months new fakes were being developed that were perfectly good at blinking."

A better detection approach is to focus on harder-to-fake tells, such as the ones that arise from the way a digital camera turns light into bits. This process starts when the camera's lens focuses incoming photons onto the digital equivalent of film: a sensor chip that collects the light energy in a rectangular grid of pixels. As that happens, says Edward Delp, an electrical engineer at Purdue University in Indiana, each sensor leaves a signature on the image — a subtle pattern of spontaneous electrical static unique to that chip. "You can exploit those unique signatures to determine which camera took which picture," says Delp. And if someone tries to splice in an object photographed with a different camera — or a face synthesized with AI — "we can detect that there is an anomaly."
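A simulation can show why a splice stands out. The sketch below makes several simplifying assumptions that are not from Delp's work. Each sensor's signature is modeled as a fixed random pattern, the image content is assumed to have already been filtered away so that only the noise residual remains, and the residual is compared with the known signature one block of pixels at a time. All names and numbers are invented.

```python
import math
import random

def correlation(a, b):
    """Pearson correlation: near 1 for matching patterns, near 0 for unrelated ones."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def make_sensor_pattern(rng, n_pixels):
    """Simulated signature: a fixed random offset for every pixel on one chip."""
    return [rng.gauss(0.0, 1.0) for _ in range(n_pixels)]

def take_photo_residual(rng, sensor_pattern, shot_noise=0.5):
    """Simulated noise residual of one photo: the chip's pattern plus fresh noise."""
    return [p + rng.gauss(0.0, shot_noise) for p in sensor_pattern]

def block_scores(residual, sensor_pattern, block_size):
    """Compare the residual with the signature one block of pixels at a time."""
    return [
        correlation(residual[i:i + block_size], sensor_pattern[i:i + block_size])
        for i in range(0, len(residual), block_size)
    ]

rng = random.Random(42)
camera_a = make_sensor_pattern(rng, 4000)
camera_b = make_sensor_pattern(rng, 4000)

photo = take_photo_residual(rng, camera_a)
donor = take_photo_residual(rng, camera_b)
photo[1000:2000] = donor[1000:2000]  # splice in a region shot with camera B

scores = block_scores(photo, camera_a, 1000)
print(scores)  # the second block correlates far less with camera A's signature
```

Actual forensic tools must first estimate a camera's signature from many photos and separate the noise from the scene, which is much harder than this idealized version suggests.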

Similarly, when algorithms like JPEG and MPEG-4 compress image and video files to a size that can be stored on a digital camera's memory chip, they produce characteristic correlations between neighboring pixels. So again, says Jeff Smith, who works on media forensics at the University of Colorado, Denver, "if you change the pixels due to face swapping, whether traditional or deepfake, you've disrupted the relationships between the pixels." From there, an investigator can build up a scan of the image or video to show exactly what parts have been manipulated.

Unfortunately, Smith adds, neither the noise nor the compression technique is bulletproof. Once the manipulation is done, "the next step is uploading the video to the internet and hoping it goes viral." And as soon as that happens, YouTube or another hosting platform puts the file through its own compression algorithm — as does every site the video is shared with after that. Add up all those compressions, says Smith, "and that can wash out the correlations that we were looking for."

That's why detection methods also have to look beyond the pixels. Say you have a video that was recorded in daylight and want to check whether a car shown was really in the original. The perspective could be off, Smith says, "or there could be shadows within the car image that don't match the shadows in the original image." Any such violation of basic physics could be a red flag for the detection system, he says.

In addition, says Delp, you could check an image's context against other sources of knowledge. For example, does a rainy-day video's digital timestamp match up with weather-service records for that alleged place and time? Or, for a video shot indoors: "We can extract the 60-hertz line frequency from the lights in the room," Delp says, "and then determine geographically whether the place you say the video is shot is where the video was actually shot." That's possible, he explains, because the ever-changing balance of supply and demand means that the power-line frequency is never precisely 60 cycles per second, and the power companies keep very careful records of how that frequency varies at each location.
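The matching step can be sketched as a sliding comparison between the hum recovered from a video and a utility's frequency log. This sketch assumes the hard part, extracting a second-by-second frequency trace from the footage, has already been done. The simulated log, the drift model and the function names are all invented for illustration.

```python
import random

def mismatch(excerpt, reference, offset):
    """Mean squared difference between the excerpt and one window of the log."""
    window = reference[offset:offset + len(excerpt)]
    return sum((e - r) ** 2 for e, r in zip(excerpt, window)) / len(excerpt)

def best_offset(excerpt, reference):
    """Slide the excerpt along the log and return the best-fitting start time."""
    offsets = range(len(reference) - len(excerpt) + 1)
    return min(offsets, key=lambda o: mismatch(excerpt, reference, o))

def make_grid_log(rng, n_seconds, nominal=60.0):
    """Hypothetical utility log: one reading per second, wandering around 60 Hz."""
    log, drift = [], 0.0
    for _ in range(n_seconds):
        drift = 0.98 * drift + rng.gauss(0.0, 0.005)
        log.append(nominal + drift)
    return log

rng = random.Random(7)
grid_log = make_grid_log(rng, 3600)  # one simulated hour of grid frequency

# Two minutes of hum "recovered" from a video, with a little measurement noise.
true_start = 1234
hum = [f + rng.gauss(0.0, 0.0005) for f in grid_log[true_start:true_start + 120]]

found = best_offset(hum, grid_log)
print(found, mismatch(hum, grid_log, found))
```

Running the same comparison against logs from several power grids, and seeing which one yields a close fit, is one way the location check Delp describes could work in principle.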

Or maybe you have a video of someone talking. One way to tell if the speaker has been deepfaked is to look at their unconscious habits — quirks that Farid and his colleagues have found to be as individual as fingerprints. For example, says Farid, "when President Obama frowns, he tends to tilt his head downward, and when he smiles he tends to turn his head upward and to the left." Obama also tends to have very animated eyebrows. "Senator [Elizabeth] Warren, on the other hand, tends to not smile a lot, but moves her head left and right quite a bit." And President Donald Trump tends to be un-animated except for his chin and mouth, says Farid. "They are extremely animated in a very particular way."

Farid and his colleagues have analyzed hours upon hours of videos to map those quirks for various world leaders. So if anyone tries to create a deepfake of one of those leaders, he says, "whether it's a face swap, a lip sync or a puppet master, those properties are disrupted and we can detect it with a fair degree of accuracy."

Not by technology alone

To spur the development of such technologies, tech companies such as Amazon, Facebook and Microsoft have joined with WITNESS, First Draft and others to launch a Deepfake Detection Challenge: Participants submit code that will be scored on how well it can detect a set of reference fakes. Cash prizes range up to $500,000.

In the meantime, though, there is a widespread consensus in the field that technology will never solve the deepfake challenge by itself. Most of the problems that have surfaced in the WITNESS roadmap exercises are non-technological. What's the best way of raising public awareness, for example? How do you regulate the technology without killing legitimate applications? And how much responsibility should be borne by social media?

In April 2018, director Jordan Peele worked with Buzzfeed Media to demonstrate the power of deepfakes. They created a video of former President Barack Obama (voiced by Peele) giving a public-service announcement about the dangers of deepfakes — an announcement that Obama never made.

CREDIT: BUZZFEEDVIDEO

Facebook partially answered that last question on January 6, when it announced it would ban any deepfake that was intended to deceive the viewers. It remains to be seen how effective that ban will be. And in any case, there are other platforms still to be heard from, and many issues still unresolved.

On the technological front, should the platforms start demanding a valid authentication tag for each file that's posted? Or failing that, do they have a responsibility to check each file for signs of manipulation? It won't be long before the detection algorithms are fast enough to keep up, says Delp. And the platforms already do extensive vetting to check for copyright violations, as well as for illegal content such as child pornography, so deepfake checks would fit right in. Alternatively, the platforms could go the crowdsourcing route by making the detection tools available for users to flag deepfakes on their own — complete with leaderboards and bounties for each one detected.

There's a gnarlier question, though — and one that gets caught up in the larger debate over fake news and disinformation in general: What should sites do when they discover bogus posts? Some might be tempted to go the libertarian route that Facebook has taken with deceptive political ads, or non-AI video manipulation: Just label the known fakes and let the viewers beware.

Unfortunately, that overlooks the harm such a post can do — given that a lot of people will believe it's real despite the "fake" label. Should, then, sites take down deepfakes as soon as they are discovered? That's pretty clear-cut when it comes to non-consensual porn, says Farid: Not only do most mainstream sites ban pornography of any kind, but even those that don't can see there's a specific person being hurt. But there is a reason that Facebook's ban carves out a large exception for Nicolas Cage-style joke videos, and deepfakes that are clearly intended as satire, parody or political commentary. In those cases, says Farid, "the legislative and regulatory side is complex because of the free-speech issue."

A lot of people are afraid to touch this question, says Kavanagh, the RAND political scientist. Especially in the Silicon Valley culture, she says, "there's often a sense that either we have no regulation, or we have a Ministry of Truth." People aren't thinking about the vast gray area in between, which is where past generations hammered out workable ways to manage print newspapers, radio and television. "I don't necessarily have a good answer to what we should do about the new media environment," Kavanagh says. "But I do think that the gray areas are where we need to have this conversation."

Source: https://knowablemagazine.org/article/technology/2020/synthetic-media-real-trouble-deepfakes
